Using CodePipeline to automate backend deployment

This post is Part 2 of a series on moving your backend to production. Do read Part 1 for more context!

As you start setting up your production environment, you will quickly realize that automating the deployment process is easier than doing it manually.

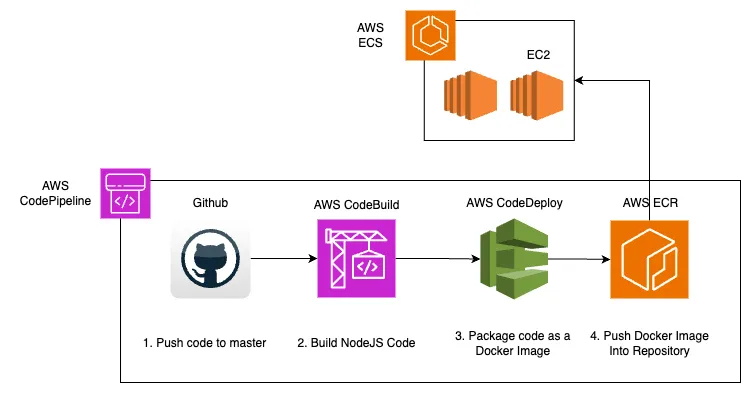

That’s where CodePipeline comes into the picture. We will automate our CI/CD pipeline so that whenever new code is committed to the master branch, it is automatically deployed to the production environment.

To enable this setup, let me introduce you to the AWS services involved:

CodePipeline

The pipeline is triggered whenever new code is pushed to master. It has three stages:

- Source stage - A developer commits to the master branch, which triggers the pipeline

- Build stage - Pulls the code from the master branch and builds the Node.js application

- Deploy stage - Packages the built application into a Docker image and pushes it to ECR (Elastic Container Registry), the container registry where the Docker image is stored
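Whichever stage pushes the image to ECR, CodePipeline's standard ECS deploy action still needs to know which image to roll out. It reads this from an `imagedefinitions.json` artifact: a JSON array of objects holding the container `name` and its `imageUri`. The TypeScript sketch below builds that file's contents. The account ID `123456789012`, region `us-east-1`, repository `backend`, container name `backend` and the commit hash are placeholder assumptions, not values from this setup.

```typescript
// One entry of imagedefinitions.json, the file read by CodePipeline's standard ECS deploy action.
interface ImageDefinition {
  name: string; // container name as declared in the ECS task definition
  imageUri: string; // full ECR image URI, including the tag
}

// Builds an ECR image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>
function ecrImageUri(accountId: string, region: string, repository: string, tag: string): string {
  return `${accountId}.dkr.ecr.${region}.amazonaws.com/${repository}:${tag}`;
}

// Serializes the image definitions in the JSON array format the deploy action expects.
function imageDefinitionsJson(containerName: string, imageUri: string): string {
  const definitions: ImageDefinition[] = [{ name: containerName, imageUri }];
  return JSON.stringify(definitions);
}

// Placeholder values (assumptions): use your own account, region, repository and container name.
// Tagging with the short commit hash ties each image to exactly one commit on master.
const commitSha = "3f9c2d1a7b8e4f60c5d2a1b9e8f7c6d5a4b3c2d1";
const imageUri = ecrImageUri("123456789012", "us-east-1", "backend", commitSha.slice(0, 7));
console.log(imageDefinitionsJson("backend", imageUri));
// prints [{"name":"backend","imageUri":"123456789012.dkr.ecr.us-east-1.amazonaws.com/backend:3f9c2d1"}]
```

Tagging each image with the commit hash (inside CodeBuild the full hash is available as `CODEBUILD_RESOLVED_SOURCE_VERSION`) makes it easy to trace a running container back to the commit that produced it, and to redeploy an older image if you need to roll back.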

ECS (Elastic Container Service)

ECS is a container orchestrator: it takes the Docker image and runs the Node.js application as containers, either on EC2 instances or on Fargate, where AWS manages the underlying servers for you. Using ECS has several advantages:

- It is easy to scale the number of running containers up and down. You can also set up auto-scaling so that ECS increases or decreases the number of containers based on traffic.

- It is easier to use and configure than EKS (Elastic Kubernetes Service).

- It automatically checks the health of the containers and, if a container goes down, replaces it with a new one.
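ECS (and a load balancer in front of it, if you use one) can only replace unhealthy containers if the application exposes something to check. A common approach is a lightweight HTTP endpoint. The sketch below uses only Node's built-in `http` module; the `/health` path and the port are assumptions, so point your health check configuration at whatever you actually use.

```typescript
import { createServer, IncomingMessage, Server, ServerResponse } from "node:http";

interface RouteResult {
  status: number;
  body: string;
}

// Pure routing logic, kept free of I/O so it is easy to unit test.
// Assumption: the health check path is /health.
function route(method: string | undefined, url: string | undefined): RouteResult {
  const path = (url ?? "").split("?")[0]; // ignore any query string
  if (method === "GET" && path === "/health") {
    // Keep this cheap: the health checker calls it frequently.
    return { status: 200, body: JSON.stringify({ status: "ok" }) };
  }
  return { status: 404, body: JSON.stringify({ error: "not found" }) };
}

// Wraps the routing logic in a plain Node.js HTTP server listening on the given port.
function startServer(port: number): Server {
  const server = createServer((req: IncomingMessage, res: ServerResponse) => {
    const { status, body } = route(req.method, req.url);
    res.writeHead(status, { "Content-Type": "application/json" });
    res.end(body);
  });
  server.listen(port);
  return server;
}
```

Calling `startServer(3000)` from your entry point starts listening; a load balancer target group health check or an ECS container health check can then poll `GET /health`. Keeping `route` separate from the server makes the check trivial to test without opening a socket.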

Considerations for your backend

If this is the first time you are configuring Docker for your backend, these are a few things to consider:

- Make sure environment variables are not hardcoded and are managed through environment files. Check out the dotenv package. After implementing the different environments, test each of them locally.

- Set up separate commands in package.json to run the application in each environment. This documents the available environments and makes it easy to run any of them.

- If your backend has to run migrations, you can start off by running the migration script before the application starts. This is risky, because a faulty migration runs directly against the production database as part of the deployment, so it is a good idea to set up a few safeguards against data loss:

  - The database is backed up regularly

  - Migrations are tested in a staging environment before being deployed to production

  - You have a process to roll back the migrations in case of any issues
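The considerations above come together in the container's startup sequence: load the environment-specific settings, fail fast if any are missing, run migrations, and only then start serving traffic. Below is a minimal TypeScript sketch under these assumptions: the variable names `DATABASE_URL` and `PORT`, the `.env.<NODE_ENV>` file naming and the `runMigrations`/`startServer` hooks are hypothetical placeholders for your own app's equivalents.

```typescript
// Hypothetical set of variables this backend needs; adjust to your application.
const REQUIRED_VARS = ["DATABASE_URL", "PORT"] as const;

interface AppConfig {
  databaseUrl: string;
  port: number;
}

// Maps NODE_ENV to an env file name, e.g. "production" -> ".env.production" (naming is an assumption).
function envFileName(nodeEnv: string | undefined): string {
  return `.env.${nodeEnv ?? "development"}`;
}

// Fails fast when a required variable is missing or malformed, instead of crashing later.
function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const missing = REQUIRED_VARS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const port = Number(env.PORT);
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`PORT must be a positive integer, got "${env.PORT}"`);
  }
  return { databaseUrl: env.DATABASE_URL as string, port };
}

// Startup order: validate config, run migrations, and only then accept traffic.
// runMigrations and startServer are hypothetical hooks supplied by your app
// (for example, your ORM's migration runner and your HTTP server).
async function bootstrap(
  env: Record<string, string | undefined>,
  runMigrations: (cfg: AppConfig) => Promise<void>,
  startServer: (cfg: AppConfig) => Promise<void>,
): Promise<AppConfig> {
  const cfg = loadConfig(env);
  await runMigrations(cfg); // if this rejects, startServer is never called
  await startServer(cfg);
  return cfg;
}
```

With dotenv, you might load the file via `dotenv.config({ path: envFileName(process.env.NODE_ENV) })` before calling `bootstrap(process.env, ...)`, and expose each environment as a package.json script such as `"start:prod": "NODE_ENV=production node dist/index.js"` (the path is hypothetical). Because `bootstrap` rejects when a migration fails, the process can exit with an error rather than serve requests against a schema it does not expect.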

Do let me know in the comments if you have any questions!
